[Jump here for more technical details.]

A few months ago, I installed BuddyPress on my Mac to try it out. It was a bit of an involved process, so I documented it:

WordPress MU, BuddyPress, and bbPress on Local Machine « Disparate.

More recently, I decided to get a webhost. Both to run some tests and, eventually, to build something useful. BuddyPress seems like a good way to go at it, especially since it’s improved a lot, in the past several months.

In fact, the installation process is much simpler, now, and I ran into some difficulties because I was following my own instructions (though adapting the process to my webhost). So a new blogpost may be in order. My previous one was very (possibly too) detailed. This one is much simpler, technically.

One thing to make clear is that BuddyPress is a set of plugins meant for WordPress µ (“WordPress MU,” “WPMU,” “WPµ”), the multi-user version of the WordPress blogging platform. BP is meant as a way to make WPµ more “social,” with such useful features as flexible profiles, user-to-user relationships, and forums (through bbPress, yet another one of those independent projects based on WordPress).

While BuddyPress depends on WPµ and does follow a blogging logic, I’m thinking about it as a social platform. Once I build it into something practical, I’ll probably use the blogging features but, in a way, it’s more of a tool to engage people in online social activities. BuddyPress probably doesn’t work as a way to “build a community” from scratch. But I think it can be quite useful as a way to engage members of an existing community, even if this engagement follows a blogger’s version of a Pareto distribution (which, hopefully, is dissociated from elitist principles).

But I digress, of course. This blogpost is more about the practical issue of adding a BuddyPress installation to a webhost.

Webhosts have come a long way, recently. Especially in terms of shared webhosting focused on LAMP (or PHP/MySQL, more specifically) for blogs and content-management. I don’t have any data on this, but it seems to me that a lot of people these days are relying on third-party webhosts instead of relying on their own servers when they want to build on their own blogging and content-management platforms. Of course, there’s a lot more people who prefer to use preexisting blog and content-management systems. For instance, it seems that there are more bloggers on WordPress.com than on other WordPress installations. And WP.com blogs probably represent a small number of people in comparison to the number of people who visit these blogs. So, in a way, those who run their own WordPress installations are a minority in the group of active WordPress bloggers which, itself, is a minority of blog visitors. Again, let’s hope this “power distribution” not a basis for elite theory!

Yes, another digression. I did tell you to skip, if you wanted the technical details!

I became part of the “self-hosted WordPress” community through a project on which I started work during the summer. It’s a website for an academic organization and I’m acting as the organization’s “Web Guru” (no, I didn’t choose the title). The site was already based on WordPress but I was rebuilding much of it in collaboration with the then-current “Digital Content Editor.” Through this project, I got to learn a lot about WordPress, themes, PHP, CSS, etc. And it was my first experience using a cPanel- (and Fantastico-)enabled webhost (BlueHost, at the time). It’s also how I decided to install WordPress on my local machine and did some amount of work from that machine.

But the local installation wasn’t an ideal solution for two reasons: a) I had to be in front of that local machine to work on this project; and b) it was much harder to show the results to the person with whom I was collaborating.

So, in the Fall, I decided to get my own staging server. After a few quick searches, I decided HostGator, partly because it was available on a monthly basis. Since this staging server was meant as a temporary solution, HG was close to ideal. It was easy to set up as a PayPal “subscription,” wasn’t that expensive (9$/month), had adequate support, and included everything that I needed at that point to install a current version of WordPress and play with theme files (after importing content from the original site). I’m really glad I made that decision because it made a number of things easier, including working from different computers, and sending links to get feedback.

While monthly HostGator fees were reasonable, it was still a more expensive proposition than what I had in mind for a longer-term solution. So, recently, a few weeks after releasing the new version of the organization’s website, I decided to cancel my HostGator subscription. A decision I made without any regret or bad feeling. HostGator was good to me. It’s just that I didn’t have any reason to keep that account or to do anything major with the domain name I was using on HG.

Though only a few weeks elapsed since I canceled that account, I didn’t immediately set out to transition to a new webhost. I didn’t go from HostGator to another webhost.

But having my own webhost still remained at the back of my mind as something which might be useful. For instance, while not really making a staging server necessary, a new phase in the academic website project brought up a sandboxing idea. Also, I went to a “WordPress Montreal” meeting and got to think about further WordPress development/deployment, including using BuddyPress for my own needs (both as my own project and as a way to build my own knowledge of the platform) instead of it being part of an organization’s project. I was also thinking about other interesting platforms which necessitate a webhost.

(More on these other platforms at a later point in time. Bottom line is, I’m happy with the prospects.)

So I wanted a new webhost. I set out to do some comparison shopping, as I’m wont to do. In my (allegedly limited) experience, finding the ideal webhost is particularly difficult. For one thing, search results are cluttered with a variety of “unuseful” things such as rants, advertising, and limited comparisons. And it’s actually not that easy to give a new webhost a try. For one thing, these hosting companies don’t necessarily have the most liberal refund policies you could imagine. And, switching a domain name between different hosts and registrars is a complicated process through which a name may remain “hostage.” Had I realized what was involved, I might have used a domain name to which I have no attachment or actually eschewed the whole domain transition and just try the webhost without a dedicated domain name.

Doh!

Live and learn. I sure do. Loving almost every minute of it.

At any rate, I had a relatively hard time finding my webhost.

I really didn’t need “bells and whistles.” For instance, all the AdSense, shopping cart, and other business-oriented features which seem to be publicized by most webhosting companies have no interest, to me.

I didn’t even care so much about absolute degree of reliability or speed. What I’m to do with this host is fairly basic stuff. The core idea is to use my own host to bypass some limitations. For instance, WordPress.com doesn’t allow for plugins yet most of the WordPress fun has to do with plugins.

I did want an “unlimited” host, as much as possible. Not because expect to have huge resource needs but I just didn’t want to have to monitor bandwidth.

I thought that my needs would be basic enough that any cPanel-enabled webhost would fit. As much as I could see, I needed FTP access to something which had PHP 5 and MySQL 5. I expected to install things myself, without use of the webhost’s scripts but I also thought the host would have some useful scripts. Although I had already registered the domain I wanted to use (through Name.com), I thought it might be useful to have a free domain in the webhosting package. Not that domain names are expensive, it’s more of a matter of convenience in terms of payment or setup.

I ended up with FatCow. But, honestly, I’d probably go with a different host if I were to start over (which I may do with another project).

I paid 88$ for two years of “unlimited” hosting, which is quite reasonable. And, on paper, FatCow has everything I need (and I bunch of things I don’t need). The missing parts aren’t anything major but have to do with minor annoyances. In other words, no real deal-breaker, here. But there’s a few things I wish I had realized before I committed on FatCow with a domain name I actually want to use.

Something which was almost a deal-breaker for me is the fact that FatCow requires payment for any additional subdomain. And these aren’t cheap: the minimum is 5$/month for five subdomains, up to 25$/month for unlimited subdomains! Even at a “regular” price of 88$/year for the basic webhosting plan, the “unlimited subdomains” feature (included in some webhosting plans elsewhere) is more than three times more expensive than the core plan.

As I don’t absolutely need extra subdomains, this is mostly a minor irritant. But it’s one reason I’ll probably be using another webhost for other projects.

Other issues with FatCow are probably not enough to motivate a switch.

For instance, the PHP version installed on FatCow (5.2.1) is a few minor releases behind the one needed by some interesting web applications. No biggie, especially if PHP is updated in a relatively reasonable timeframe. But still makes for a slight frustration.

The MySQL version seems recent enough, but it uses non-standard tools to manage it, which makes for some confusion. Attempting to create some MySQL databases with obvious names (say “wordpress”) fails because the database allegedly exists (even though it doesn’t show up in the MySQL administration). In the same vein, the URL of the MySQL is <username>.fatcowmysql.com instead of localhost as most installers seem to expect. Easy to handle once you realize it, but it makes for some confusion.

In terms of Fantastico-like simplified installation of webapps, FatCow uses InstallCentral, which looks like it might be its own Fantastico replacement. InstallCentral is decent enough as an installation tool and FatCow does provide for some of the most popular blog and CMS platforms. But, in some cases, the application version installed by FatCow is old enough (2005!) that it requires multiple upgrades to get to a current version. Compared to other installation tools, FatCow’s InstallCentral doesn’t seem really efficient at keeping track of installed and released versions.

Something which is partly a neat feature and partly a potential issue is the way FatCow handles Apache-related security. This isn’t something which is so clear to me, so I might be wrong.

Accounts on both BlueHost and HostGator include a public_html directory where all sorts of things go, especially if they’re related to publicly-accessible content. This directory serves as the website’s root, so one expects content to be available there. The “index.html” or “index.php” file in this directory serves as the website’s frontpage. It’s fairly obvious, but it does require that one would understand a few things about webservers. FatCow doesn’t seem to create a public_html directory in a user’s server space. Or, more accurately, it seems that the root directory (aka ‘/’) is in fact public_html. In this sense, a user doesn’t have to think about which directory to use to share things on the Web. But it also means that some higher-level directories aren’t available. I’ve already run into some issues with this and I’ll probably be looking for a workaround. I’m assuming there’s one. But it’s sometimes easier to use generally-applicable advice than to find a custom solution.

Further, in terms of access control… It seems that webapps typically make use of diverse directories and .htaccess files to manage some forms of access controls. Unix-style file permissions are also involved but the kind of access needed for a web app is somewhat different from the “User/Group/All” of Unix filesystems. AFAICT, FatCow does support those .htaccess files. But it has its own tools for building them. That can be a neat feature, as it makes it easier, for instance, to password-protect some directories. But it could also be the source of some confusion.

There are other issues I have with FatCow, but it’s probably enough for now.

So… On to the installation process… 😉

It only takes a few minutes and is rather straightforward. This is the most verbose version of that process you could imagine…

Surprised? 😎

Disclaimer: I’m mostly documenting how I did it and there are some things about which I’m unclear. So it may not work for you. If it doesn’t, I may be able to help but I provide no guarantee that I will. I’m an anthropologist, not a Web development expert.

As always, YMMV.

A few instructions here are specific to FatCow, but the general process is probably valid on other hosts.

I’m presenting things in a sequence which should make sense. I used a slightly different order myself, but I think this one should still work. (If it doesn’t, drop me a comment!)

In these instructions, straight quotes (“”) are used to isolate elements from the rest of the text. They shouldn’t be typed or pasted.

I use “example.com” to refer to the domain on which the installation is done. In my case, it’s the domain name I transfered to FatCow from another registrar but it could probably be done without a dedicated domain (in which case it would be “<username>.fatcow.com” where “<username>” is your FatCow username).

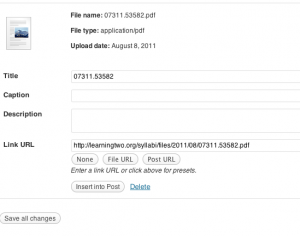

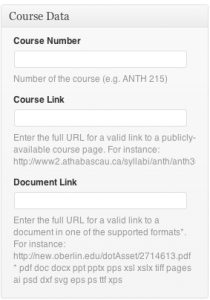

I started with creating a MySQL database for WordPress MU. FatCow does have phpMyAdmin but the default tool in the cPanel is labeled “Manage MySQL.” It’s slightly easier to use for creating new databases than phpMyAdmin because it creates the database and initial user (with confirmed password) in a single, easy-to-understand dialog box.

So I created that new database, user, and password, noting down this information. Since that password appears in clear text at some point and can easily be changed through the

same interface, I used one which was easy to remember but wasn’t one I use elsewhere.

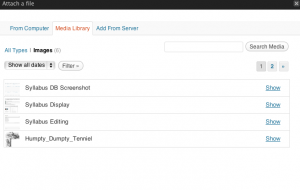

Then, I dowloaded the following files to my local machine in order to upload them to my FatCow server space. The upload can be done through either

FTP or FatCow’s

FileManager. I tend to prefer FTP (via

CyberDuck on the Mac or

FileZilla on PC). But the FileManager does allow for easy uploads.

(Wish it could be more direct, using the HTTP links directly instead of downloading to upload. But I haven’t found a way to do it through either FTP or the FileManager.)

At any rate, here are the four files I transfered to my FatCow space, using .zip when there’s a choice (the .tar.gz “tarball” versions also work but require a couple of extra steps).

- WordPress MU (wordpress-mu-2.9.1.1.zip, in my case)

- Buddymatic (buddymatic.0.9.6.3.1.zip, in my case)

- EarlyMorning (only one version, it seems)

- EarlyMorning-BP (only one version, it seems)

Only the WordPress MU archive is needed to install BuddyPress. The last three files are needed for EarlyMorning, a BuddyPress theme that I found particularly neat. It’s perfectly possible to install BuddyPress without this specific theme. (Although, doing so, you need to install a BuddyPress-compatible theme, if only by moving some folders to make the default theme available, as I explained in point 15 in that previous tutorial.) Buddymatic itself is a theme framework which includes some child themes, so you don’t need to install EarlyMorning. But installing it is easy enough that I’m adding instructions related to that theme.

These files can be uploaded anywhere in my FatCow space. I uploaded them to a kind of test/upload directory, just to make it clear, for me.

A major FatCow idiosyncrasy is its FileManager (actually called “FileManager Beta” in the documentation but showing up as “FileManager” in the cPanel). From my experience with both BlueHost and HostGator (two well-known webhosting companies), I can say that FC’s FileManager is quite limited. One thing it doesn’t do is uncompress archives. So I have to resort to the “Archive Gateway,” which is surprisingly slow and cumbersome.

At any rate, I used that Archive Gateway to uncompress the four files. WordPress µ first (in the root directory or “/”), then both Buddymatic and EarlyMorning in “/wordpress-mu/wp-content/themes” (you can chose the output directory for zip and tar files), and finally EarlyMorning-BP (anywhere, individual files are moved later). To uncompress each file, select it in the dropdown menu (it can be located in any subdirectory, Archive Gateway looks everywhere), add the output directory in the appropriate field in the case of Buddymatic or EarlyMorning, and press “Extract/Uncompress”. Wait to see a message (in green) at the top of the window saying that the file has been uncompressed successfully.

Then, in the FileManager, the contents of the EarlyMorning-BP directory have to be moved to “/wordpress-mu/wp-content/themes/earlymorning”. (Thought they could be uncompressed there directly, but it created an extra folder.) To move those files in the FileManager, I browse to that earlymorning-bp directory, click on the checkbox to select all, click on the “Move” button (fourth from right, marked with a blue folder), and add the output path: /wordpress-mu/wp-content/themes/earlymorning

These files are tweaks to make the EarlyMorning theme work with BuddyPress.

Then, I had to change two files, through the FileManager (it could also be done with an FTP client).

One change is to EarlyMorning’s style.css:

/wordpress-mu/wp-content/themes/earlymorning/style.css

There, “Template: thematic” has to be changed to “Template: buddymatic” (so, “the” should be changed to “buddy”).

That change is needed because the EarlyMorning theme is a child theme of the “Thematic” WordPress parent theme. Buddymatic is a BuddyPress-savvy version of Thematic and this changes the child-parent relation from Thematic to Buddymatic.

The other change is in the Buddymatic “extensions”:

/wordpress-mu/wp-content/themes/buddymatic/library/extensions/buddypress_extensions.php

There, on line 39, “$bp->root_domain” should be changed to “bp_root_domain()”.

This change is needed because of something I’d consider a bug but that a commenter on another blog was kind enough to troubleshoot. Without this modification, the login button in BuddyPress wasn’t working because it was going to the website’s root (example.com/wp-login.php) instead of the WPµ installation (example.com/wordpress-mu/wp-login.php). I was quite happy to find this workaround but I’m not completely clear on the reason it works.

Then, something I did which might not be needed is to rename the “wordpress-mu” directory. Without that change, the BuddyPress installation would sit at “example.com/wordpress-mu,” which seems a bit cryptic for users. In my mind, “example.com/<name>,” where “<name>” is something meaningful like “social” or “community” works well enough for my needs. Because FatCow charges for subdomains, the “<name>.example.com” option would be costly.

(Of course, WPµ and BuddyPress could be installed in the site’s root and the frontpage for “example.com” could be the BuddyPress frontpage. But since I think of BuddyPress as an add-on to a more complete site, it seems better to have it as a level lower in the site’s hierarchy.)

With all of this done, the actual WPµ installation process can begin.

The first thing is to browse to that directory in which WPµ resides, either “example.com/wordpress-mu” or “example.com/<name>” with the “<name>” you chose. You’re then presented with the WordPress µ Installation screen.

Since FatCow charges for subdomains, it’s important to choose the following option: “Sub-directories (like example.com/blog1).” It’s actually by selecting the other option that I realized that FatCow restricted subdomains.

The Database Name, username and password are the ones you created initially with Manage MySQL. If you forgot that password, you can actually change it with that same tool.

An important FatCow-specific point, here, is that “Database Host” should be “<username>.fatcowmysql.com” (where “<username>” is your FatCow username). In my experience, other webhosts use “localhost” and WPµ defaults to that.

You’re asked to give a name to your blog. In a way, though, if you think of BuddyPress as more of a platform than a blogging system, that name should be rather general. As you’re installing “WordPress Multi-User,” you’ll be able to create many blogs with more specific names, if you want. But the name you’re entering here is for BuddyPress as a whole. As with <name> in “example.com/<name>” (instead of “example.com/wordpress-mu”), it’s a matter of personal opinion.

Something I noticed with the EarlyMorning theme is that it’s a good idea to keep the main blog’s name relatively short. I used thirteen characters and it seemed to fit quite well.

Once you’re done filling in this page, WPµ is installed in a flash. You’re then presented with some information about your installation. It’s probably a good idea to note down some of that information, including the full paths to your installation and the administrator’s password.

But the first thing you should do, as soon as you log in with “admin” as username and the password provided, is probably to the change that administrator password. (In fact, it seems that a frequent advice in the WordPress community is to create a new administrator user account, with a different username than “admin,” and delete the “admin” account. Given some security issues with WordPress in the past, it seems like a good piece of advice. But I won’t describe it here. I did do it in my installation and it’s quite easy to do in WPµ.

Then, you should probably enable plugins here:

example.com/<name>/wp-admin/wpmu-options.php#menu

(From what I understand, it might be possible to install BuddyPress without enabling plugins, since you’re logged in as the administrator, but it still makes sense to enable them and it happens to be what I did.)

You can also change a few other options, but these can be set at another point.

One option which is probably useful, is this one:

Obviously, it’s not necessary. But in the interest of opening up the BuddyPress to the wider world without worrying too much about a proliferation of blogs, it might make sense. You may end up with some fake user accounts, but that shouldn’t be a difficult problem to solve.

Now comes the installation of the BuddyPress plugin itself. You can do so by going here:

example.com/<name>/wp-admin/plugin-install.php

And do a search for “BuddyPress” as a term. The plugin you want was authored by “The BuddyPress Community.” (In my case, version 1.1.3.) Click the “Install” link to bring up the installation dialog, then click “Install Now” to actually install the plugin.

Once the install is done, click the “Activate” link to complete the basic BuddyPress installation.

You now have a working installation of BuddyPress but the BuddyPress-savvy EarlyMorning isn’t enabled. So you need to go to “example.com/<name>/wp-admin/wpmu-themes.php” to enable both Buddymatic and EarlyMorning. You should then go to “example.com/<name>/wp-admin/themes.php” to activate the EarlyMorning theme.

Something which tripped me up because it’s now much easier than before is that forums (provided through bbPress) are now, literally, a one-click install. If you go here:

example.com/<name>/wp-admin/admin.php?page=bb-forums-setup

You can set up a new bbPress install (“Set up a new bbPress installation”) and everything will work wonderfully in terms of having forums fully integrated in BuddyPress. It’s so seamless that I wasn’t completely sure it had worked.

Besides this, I’d advise that you set up a few widgets for the BuddyPress frontpage. You do so through an easy-to-use drag-and-drop interface here:

example.com/<name>/wp-admin/widgets.php

I especially advise you to add the Twitter RSS widget because it seems to me to fit right in. If I’m not mistaken, the EarlyMorning theme contains specific elements to make this widget look good.

After that, you can just have fun with your new BuddyPress installation. The first thing I did was to register a new user. To do so, I logged out of my admin account, and clicked on the Sign Up button. Since I “allow new registrations,” it’s a very simple process. In fact, this is one place where I think that BuddyPress shines. Something I didn’t explain is that you can add a series of fields for that registration and the user profile which goes with it.

The whole process really shouldn’t take very long. In fact, the longest parts have probably to do with waiting for Archive Gateway.

The rest is “merely” to get people involved in your BuddyPress installation. It can happen relatively easily, if you already have a group of people trying to do things together online. But it can be much more complicated than any software installation process… 😉

45.559231-73.687999